Introduction

Artificial intelligence (AI) is no longer a futuristic concept; it’s here, and it’s shaping nearly every aspect of our lives. From voice assistants in our homes to complex algorithms managing financial markets, AI’s impact is undeniable. However, with great power comes great responsibility, and the rise of AI has sparked critical conversations about ethics, transparency, and accountability. In this article, we’ll explore these vital aspects and why they matter in today’s AI-driven world.

The Rise of AI in Modern Society

AI’s integration into our daily lives has been swift and profound. We see it in healthcare, where AI algorithms assist in diagnosing diseases, and in the automotive industry, where autonomous vehicles promise to revolutionize transportation. But as AI becomes more pervasive, the ethical implications become increasingly urgent.

The Importance of Ethical AI

Why Ethics Matter in AI Development

Ethics in AI isn’t just a buzzword—it’s a necessity. AI systems are only as good as the data they are trained on and the intentions behind their design. Without ethical considerations, AI can perpetuate biases, invade privacy, and even cause harm. Ensuring that AI is developed and used ethically is crucial for maintaining public trust and preventing negative outcomes.

The Consequences of Ignoring AI Ethics

Ignoring ethics in AI development can lead to severe consequences, from biased decision-making to catastrophic system failures. For example, if an AI system used in criminal justice is biased, it could result in unfair sentencing, exacerbating existing inequalities. The stakes are high, and the need for ethical AI has never been greater.

Understanding AI Transparency

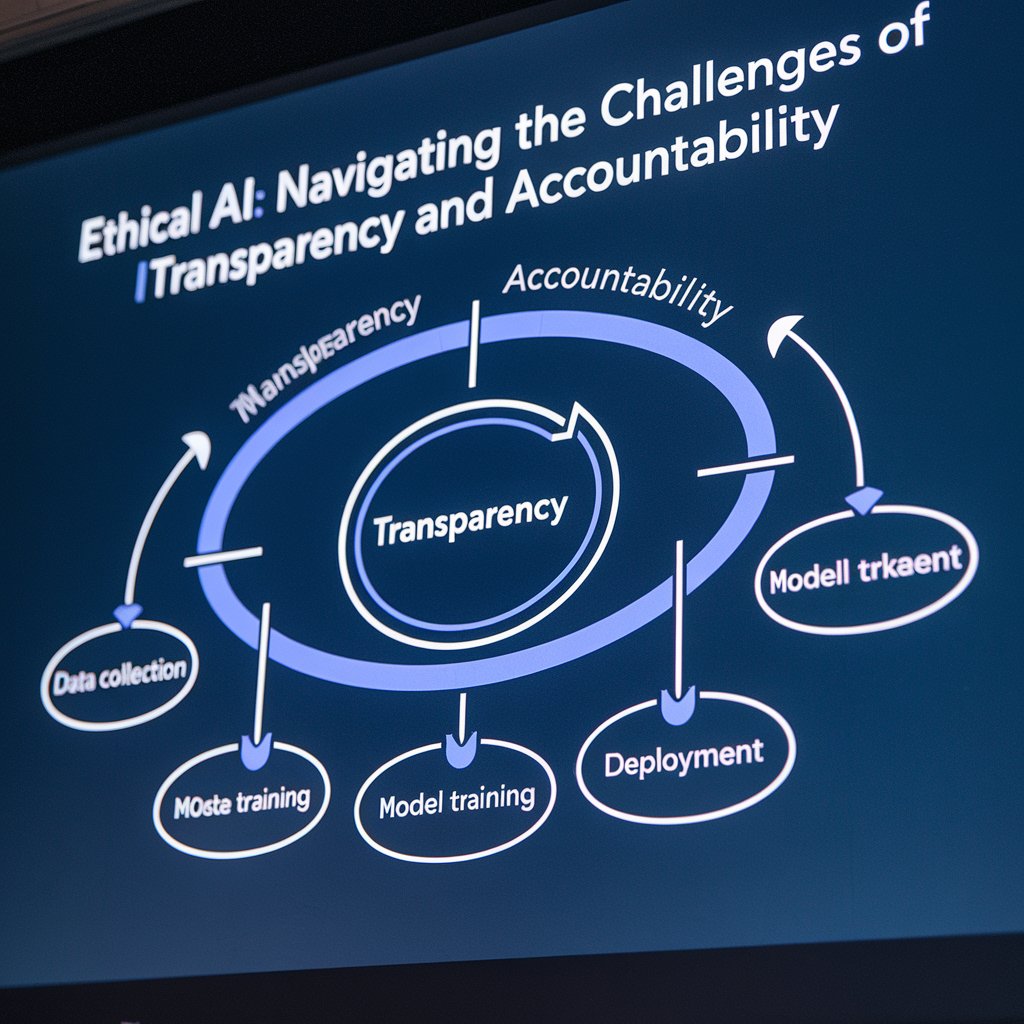

What is AI Transparency?

Transparency in AI refers to the clarity and openness with which AI systems operate. It involves making the processes and decision-making criteria of AI systems understandable to humans, ensuring that users and stakeholders know how and why a particular decision was made.

Defining Transparency in the Context of AI

In the context of AI, transparency means more than just explaining outcomes. It involves shedding light on the algorithms, data sources, and logic that drive AI systems. Without transparency, AI remains a “black box” where decisions are made without any insight into the process.

The Role of Transparency in AI Trustworthiness

Transparency is a cornerstone of trust. If users don’t understand how an AI system works, they’re less likely to trust it. This lack of trust can lead to resistance against AI adoption, even in cases where AI could provide significant benefits.

Challenges in Achieving AI Transparency

The Complexity of AI Systems

One of the biggest hurdles in achieving transparency is the sheer complexity of AI systems. Modern AI, particularly deep learning, involves layers of computations that are difficult to interpret, even for experts. This complexity makes it challenging to provide clear, understandable explanations for AI decisions.

Proprietary Algorithms and the Black Box Problem

Many AI systems are built using proprietary algorithms, which companies are often reluctant to disclose for competitive reasons. This creates a “black box” scenario in which the inner workings of the AI are hidden from the people affected by its decisions, making external scrutiny very difficult.

The Balance Between Transparency and Innovation

There’s also a delicate balance to strike between transparency and innovation. Full transparency might require disclosing trade secrets, which could stifle innovation and give competitors an edge. Companies must find ways to be transparent without sacrificing their competitive advantage.

The Accountability Aspect of AI

What Does AI Accountability Mean?

AI accountability refers to the responsibility of developers, users, and organizations to ensure that AI systems operate ethically and within legal frameworks. It’s about making sure that when AI makes a mistake, there is a clear path to address it and hold the appropriate parties accountable.

The Need for Clear Responsibility in AI Decisions

As AI systems take on more decision-making roles, it’s crucial to establish clear lines of responsibility. If an autonomous vehicle causes an accident, who is responsible—the manufacturer, the software developer, or the car owner? These are the kinds of questions that need clear answers.

Legal and Ethical Implications

The legal landscape surrounding AI is still evolving, but accountability is at the forefront of many discussions. There are ethical implications as well—AI systems must be designed in ways that allow for accountability, ensuring that they can be audited and corrected when necessary.

Challenges in Establishing AI Accountability

The Difficulty of Tracing AI Decisions

Tracing the decision-making process of an AI can be difficult, especially with complex algorithms. When something goes wrong, it’s not always easy to pinpoint where the issue occurred or who is responsible.
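One common way to make decisions easier to trace is to log every decision together with the model version and the inputs that produced it. The sketch below is a minimal illustration of that idea, not a prescribed design: the `DecisionRecord` fields, the function names, and the example model version string are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One traceable entry per model decision (field names are illustrative)."""
    model_version: str
    inputs: dict
    output: str
    timestamp: str
    input_hash: str


def record_decision(model_version: str, inputs: dict, output: str) -> DecisionRecord:
    # Hash a canonical (key-sorted) JSON form of the inputs so that the same
    # inputs always produce the same fingerprint, whatever the key order.
    canonical = json.dumps(inputs, sort_keys=True)
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return DecisionRecord(
        model_version=model_version,
        inputs=inputs,
        output=output,
        timestamp=datetime.now(timezone.utc).isoformat(),
        input_hash=digest,
    )


def to_log_line(record: DecisionRecord) -> str:
    # One JSON object per line is easy to append to a file and search later.
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical usage: the model name and input fields are made up.
record = record_decision("credit-model-1.2", {"income": 52000, "age": 41}, "approve")
log_line = to_log_line(record)
```

With a log like this, an investigator can at least answer which model version produced a given decision and from which inputs, which is the starting point for assigning responsibility.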

The Role of Human Oversight

Human oversight is essential for maintaining accountability in AI systems. While AI can handle many tasks autonomously, a human should be able to review, override, and correct the system’s decisions, especially high-stakes ones, so that they align with ethical and legal standards.
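A simple way to keep a human in the loop is to let the system act on its own only when it is confident, and to send everything else to a person. The following is a minimal sketch under that assumption; the `Prediction` type, the `route` function, and the 0.9 threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # the model's own probability estimate, from 0.0 to 1.0


def route(prediction: Prediction, threshold: float = 0.9) -> str:
    """Return "auto" to act on the prediction or "human_review" to escalate it."""
    if not 0.0 <= prediction.confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    # Anything below the threshold is handed to a human reviewer.
    return "auto" if prediction.confidence >= threshold else "human_review"


# Hypothetical usage with made-up predictions.
confident = route(Prediction("approve", 0.97))   # acted on automatically
uncertain = route(Prediction("deny", 0.62))      # escalated to a person
```

The threshold is a policy decision rather than a technical one: lowering it sends fewer cases to reviewers, raising it sends more, and a model's stated confidence is only useful here if it is reasonably well calibrated.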

Accountability in Autonomous Systems

Autonomous systems, such as self-driving cars or drones, present unique challenges for accountability. These systems operate independently, often making decisions in real-time, which complicates the process of determining accountability when things go wrong.

Case Studies in AI Ethics

Examples of AI Transparency and Accountability in Action

Three application areas illustrate why transparency and accountability matter in practice.

AI in Healthcare

In healthcare, AI is used to assist in diagnosing patients and recommending treatments. Ensuring that these AI systems are transparent and accountable is critical because errors can directly affect patient safety.

AI in Autonomous Vehicles

Autonomous vehicles must be both transparent and accountable to gain public trust. When these vehicles make decisions—such as braking suddenly or changing lanes—those decisions need to be understandable and traceable.

AI in Financial Services

In financial services, AI systems are used for everything from credit scoring to detecting fraudulent transactions. Transparency and accountability are essential here to prevent bias and ensure fairness in financial decisions.

Strategies for Improving AI Transparency and Accountability

Promoting Ethical AI Design

To improve AI transparency and accountability, we must start at the design phase.

Incorporating Ethical Guidelines in AI Development

Developers should follow ethical guidelines from the outset, ensuring that AI systems are designed with transparency and accountability in mind.

Engaging Diverse Stakeholders

Involving a diverse range of stakeholders in AI development can help identify potential ethical issues and ensure that the AI system meets the needs of all users.

Regulatory Approaches to Ethical AI

Governments and organizations are beginning to recognize the need for regulations that promote ethical AI.

Existing AI Regulations

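Some existing regulations already touch on AI transparency and accountability. The European Union’s General Data Protection Regulation (GDPR), for example, is not AI-specific, but it restricts decisions based solely on automated processing and requires that people be given meaningful information about the logic involved.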

The Need for New Legal Frameworks

However, existing regulations may not be enough. As AI continues to evolve, new legal frameworks will be necessary to address emerging challenges.

Technological Solutions to Enhance Transparency and Accountability

Technology itself can help address the challenges of transparency and accountability.

Explainable AI

Explainable AI (XAI) is a field focused on making AI systems more understandable to humans. XAI aims to develop methods that allow AI systems to explain their decisions in a way that people can understand.
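Permutation importance is one widely used, model-agnostic XAI technique: shuffle one input feature and measure how much the model’s accuracy drops. The sketch below implements it with the standard library only; the toy model, the data, and all function names are assumptions made up for illustration.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def accuracy(model: Model, rows: list, labels: list) -> float:
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(model: Model, rows: list, labels: list,
                           feature: int, repeats: int = 20, seed: int = 0) -> float:
    """Average drop in accuracy when the values of one feature are shuffled."""
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [row[feature] for row in rows]
        rng.shuffle(column)
        # Rebuild each row with the shuffled value in place of the original one.
        shuffled = [
            list(row[:feature]) + [value] + list(row[feature + 1:])
            for row, value in zip(rows, column)
        ]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats


# Toy model (hypothetical): it looks only at feature 0 and ignores feature 1.
def toy_model(row: Row) -> int:
    return int(row[0] > 0.5)


rows = [[0.1, 5.0], [0.9, 3.0], [0.2, 8.0], [0.8, 1.0], [0.3, 7.0], [0.7, 2.0]]
labels = [0, 1, 0, 1, 0, 1]

importance_used = permutation_importance(toy_model, rows, labels, feature=0)
importance_ignored = permutation_importance(toy_model, rows, labels, feature=1)
```

Because the toy model never reads feature 1, shuffling it cannot change any prediction and its importance is exactly 0.0, while feature 0 receives a positive score. An explanation of this kind tells users which inputs a decision actually depends on, without exposing the model’s internals.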

Auditing AI Systems

Regular audits of AI systems can help ensure they are operating as intended and remain accountable. These audits should be thorough and conducted by independent parties.
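One check an audit might include is whether the system approves different groups at very different rates. The sketch below computes that gap from a list of past decisions; the group labels, the data, and the function names are hypothetical, and a large gap is a signal to investigate rather than proof of unfairness.

```python
from collections import defaultdict


def approval_rates(decisions: list) -> dict:
    """Map each group label to the share of its cases that were approved."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions: list) -> float:
    """Largest difference in approval rate between any two groups."""
    if not decisions:
        raise ValueError("at least one decision is required")
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up audit sample: (group label, was the application approved?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
gap = parity_gap(sample)  # group A is approved 75% of the time, group B 25%
```

An independent auditor could run a check like this on logged decisions at regular intervals and flag the system for review whenever the gap exceeds an agreed limit.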

AI Ethics Boards and Committees

Establishing AI ethics boards or committees within organizations can provide oversight and ensure that ethical considerations are prioritized in AI development and deployment.

The Future of Ethical AI

Emerging Trends in AI Transparency and Accountability

As awareness of AI ethics grows, organizations are placing more emphasis on transparency and accountability and are developing new tools and frameworks to support these goals.

The Role of Global Collaboration in AI Ethics

AI is a global phenomenon, and addressing its ethical challenges requires global collaboration. Countries and organizations must work together to establish standards and best practices for AI transparency and accountability.

Preparing for the Ethical Challenges Ahead

As AI continues to advance, new ethical challenges will undoubtedly arise. Preparing for these challenges requires ongoing vigilance, adaptation, and a commitment to ethical principles.

Conclusion

Recap of the Importance of Ethical AI

Ethical AI is not just a theoretical concept; it’s a practical necessity. Transparency and accountability are crucial for ensuring that AI systems are trusted and that they operate in ways that benefit society as a whole.

Final Thoughts on Navigating AI Challenges

Navigating the challenges of AI transparency and accountability is not easy, but it’s essential for the responsible development and use of AI. By prioritizing these values, we can harness the power of AI while minimizing its risks.

FAQs

What is the biggest challenge in AI transparency?

The biggest challenge in AI transparency is the complexity of AI systems. Many AI models, especially deep learning models, operate in ways that are difficult to interpret, making it hard to explain their decisions.

How can companies ensure AI accountability?

Companies can ensure AI accountability by incorporating ethical guidelines into AI development, maintaining human oversight, and regularly auditing their AI systems.

Are there any regulations governing AI ethics?

Yes, there are regulations like the GDPR that address aspects of AI ethics, particularly around data protection and transparency. However, more specific regulations are needed as AI technology evolves.

What is explainable AI?

Explainable AI (XAI) refers to methods and techniques that make the outputs of AI systems understandable to humans, helping to clarify how and why decisions are made.

Why is human oversight important in AI systems?

Human oversight is crucial in AI systems to ensure that the decisions made by AI align with ethical standards and can be corrected if they deviate from expected behavior.